
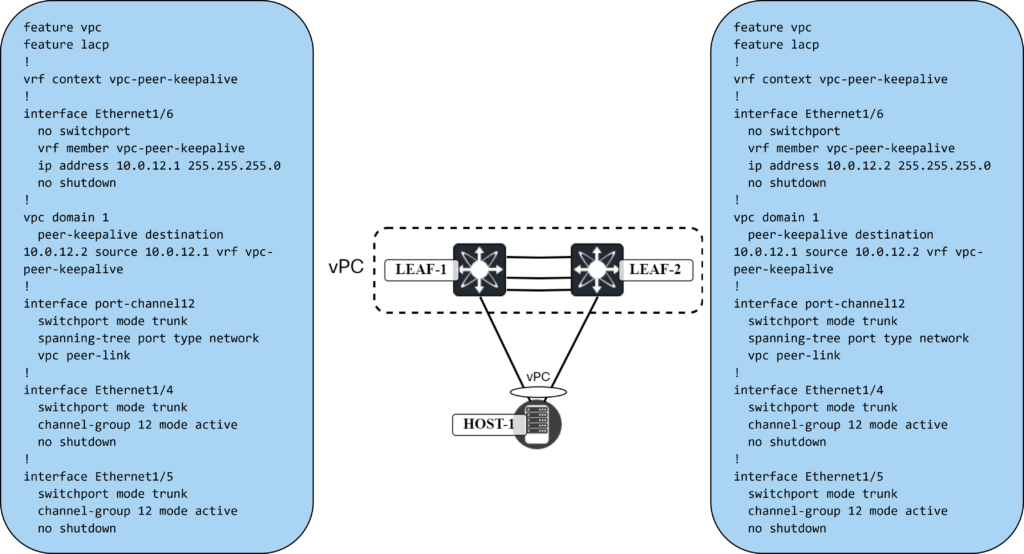

When building leaf and spine networks, leafs connect to spines, but leafs don’t connect to other leafs, and spines don’t connect to other spines. There are exceptions, and vPC is one of them: the leafs that are going to be part of the same vPC need to connect to each other. There are two ways of achieving this:

- Physical interfaces.

- Fabric peering.

We will first use physical interfaces and later replace them with fabric peering. My lab is virtual, so take “physical” with a grain of salt, but they will be dedicated interfaces. Configuring vPC requires the following:

- Enable vPC.

- Enable LACP.

- Create vPC domain.

- Create a VRF for the vPC peer keepalive.

- Configure the interface for vPC peer keepalive.

- Configure the vPC peer keepalive.

- Configure the vPC peer link interfaces.

- Configure the vPC peer link.
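The steps above map onto a small set of NX-OS configuration commands. The Python sketch below renders them in roughly that order from a handful of parameters, which can be handy when building several leaf pairs. Only the domain ID (1) and the peer-link port-channel (Po12) come from the show vpc output in this post; the keepalive VRF name, interface names and addresses are hypothetical placeholders. The generated lines are a typical NX-OS form that should be checked against the release in use, not the exact configuration used in this lab.

```python
from dataclasses import dataclass


@dataclass
class VpcPeerSettings:
    domain_id: int                # vPC domain (1 in the show vpc output)
    peer_link_po: int             # peer-link port-channel (Po12 in the output)
    peer_link_members: list[str]  # HYPOTHETICAL peer-link member interfaces
    keepalive_vrf: str            # HYPOTHETICAL VRF used only for the keepalive
    keepalive_intf: str           # HYPOTHETICAL routed keepalive interface
    keepalive_src: str            # HYPOTHETICAL local keepalive address (CIDR)
    keepalive_dst: str            # HYPOTHETICAL peer keepalive address


def render_vpc_peer_config(s: VpcPeerSettings) -> list[str]:
    """Return NX-OS config lines roughly following the steps listed above."""
    src_ip = s.keepalive_src.split("/")[0]  # peer-keepalive takes a bare IP
    lines = [
        "feature vpc",                        # enable vPC
        "feature lacp",                       # enable LACP
        f"vrf context {s.keepalive_vrf}",     # VRF for the peer keepalive
        f"interface {s.keepalive_intf}",      # keepalive interface (routed)
        "  no switchport",
        f"  vrf member {s.keepalive_vrf}",
        f"  ip address {s.keepalive_src}",
        "  no shutdown",
        f"vpc domain {s.domain_id}",          # vPC domain and its keepalive
        f"  peer-keepalive destination {s.keepalive_dst}"
        f" source {src_ip} vrf {s.keepalive_vrf}",
    ]
    for member in s.peer_link_members:       # peer-link member interfaces
        lines += [
            f"interface {member}",
            "  switchport",
            "  switchport mode trunk",
            f"  channel-group {s.peer_link_po} mode active",
            "  no shutdown",
        ]
    lines += [
        f"interface port-channel{s.peer_link_po}",  # the peer link itself
        "  switchport",
        "  switchport mode trunk",
        "  vpc peer-link",
    ]
    return lines


if __name__ == "__main__":
    demo = VpcPeerSettings(
        domain_id=1,
        peer_link_po=12,
        peer_link_members=["Ethernet1/1", "Ethernet1/2"],  # hypothetical
        keepalive_vrf="VPC-KEEPALIVE",                      # hypothetical
        keepalive_intf="Ethernet1/3",                       # hypothetical
        keepalive_src="192.0.2.1/30",                       # hypothetical
        keepalive_dst="192.0.2.2",                          # hypothetical
    )
    print("\n".join(render_vpc_peer_config(demo)))
```

The second leaf would use the same domain and peer-link values with the keepalive source and destination swapped.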

This is shown below:

The vPC is now up:

```
Leaf1# show vpc
Legend:
                (*) - local vPC is down, forwarding via vPC peer-link

vPC domain id                     : 1
Peer status                       : peer adjacency formed ok
vPC keep-alive status             : peer is alive
Configuration consistency status  : success
Per-vlan consistency status       : success
Type-2 consistency status         : success
vPC role                          : primary
Number of vPCs configured         : 0
Peer Gateway                      : Disabled
Dual-active excluded VLANs        : -
Graceful Consistency Check        : Enabled
Auto-recovery status              : Disabled
Delay-restore status              : Timer is off.(timeout = 30s)
Delay-restore SVI status          : Timer is off.(timeout = 10s)
Delay-restore Orphan-port status  : Timer is off.(timeout = 0s)
Operational Layer3 Peer-router    : Disabled
Virtual-peerlink mode             : Disabled

vPC Peer-link status
---------------------------------------------------------------------
id    Port   Status Active vlans
--    ----   ------ -------------------------------------------------
1     Po12   up     1,10,20,100
```
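When checking many switches, it can help to evaluate this output programmatically. Below is a minimal sketch that scrapes the “key : value” lines of show vpc text output and flags an unhealthy pair. The strings it compares against are copied from the output above and may differ between NX-OS releases; where available, structured output (show vpc | json) is generally a sturdier input than screen-scraping.

```python
import re

# "Key : value" lines, e.g. "Peer status : peer adjacency formed ok"
FIELD_RE = re.compile(r"^\s*([^:]+?)\s*:\s*(.+?)\s*$")

# Values expected on a healthy pair, copied from the output above.
HEALTHY = {
    "Peer status": "peer adjacency formed ok",
    "vPC keep-alive status": "peer is alive",
    "Configuration consistency status": "success",
    "Per-vlan consistency status": "success",
    "Type-2 consistency status": "success",
}


def parse_show_vpc(output: str) -> dict[str, str]:
    """Collect the 'key : value' summary fields from show vpc text output."""
    fields = {}
    for line in output.splitlines():
        match = FIELD_RE.match(line)
        if match:  # lines without a value after ':' (e.g. "Legend:") are skipped
            fields[match.group(1)] = match.group(2)
    return fields


def vpc_is_healthy(fields: dict[str, str]) -> bool:
    """True if every field in HEALTHY has its expected value."""
    return all(fields.get(key) == value for key, value in HEALTHY.items())


if __name__ == "__main__":
    sample = """\
vPC domain id                     : 1
Peer status                       : peer adjacency formed ok
vPC keep-alive status             : peer is alive
Configuration consistency status  : success
Per-vlan consistency status       : success
Type-2 consistency status         : success
vPC role                          : primary
"""
    fields = parse_show_vpc(sample)
    print(fields["vPC role"], vpc_is_healthy(fields))  # primary True
```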

Note that the following must be consistent on both peers when using vPC in a VXLAN network:

- Same VLAN-to-VNI mapping on both peers.

- An SVI present on both peers for each VLAN mapped to a VNI.

- The same BUM replication mechanism for each VNI, either multicast or ingress replication.

- If using multicast, the same multicast group on both peers.

If the consistency check fails, the NVE loopback interface is shut down on the switch in the vPC secondary role.
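One way to catch these mismatches before the switches do is to compare the relevant settings from both peers. The sketch below assumes the settings have already been collected into a hypothetical VxlanPeerConfig structure (VLAN-to-VNI map, VLANs with an SVI, per-VNI BUM mode and multicast group); how they are gathered from the switches is out of scope, and the VNI numbers and multicast group in the example are made up.

```python
from dataclasses import dataclass, field


@dataclass
class VxlanPeerConfig:
    """HYPOTHETICAL summary of one vPC peer's VXLAN settings."""
    vlan_to_vni: dict[int, int]        # VLAN ID -> VNI
    svi_vlans: set[int]                # VLANs that have an SVI
    bum_mode: dict[int, str]           # VNI -> "multicast" or "ingress-replication"
    mcast_group: dict[int, str] = field(default_factory=dict)  # VNI -> group


def vxlan_vpc_mismatches(a: VxlanPeerConfig, b: VxlanPeerConfig) -> list[str]:
    """List the consistency problems between two vPC peers (empty if none)."""
    problems = []
    if a.vlan_to_vni != b.vlan_to_vni:
        problems.append("VLAN-to-VNI mapping differs")
    for vlan in sorted(a.vlan_to_vni.keys() | b.vlan_to_vni.keys()):
        if (vlan in a.svi_vlans) != (vlan in b.svi_vlans):
            problems.append(f"SVI for VLAN {vlan} present on only one peer")
    for vni in sorted(set(a.vlan_to_vni.values()) | set(b.vlan_to_vni.values())):
        mode_a, mode_b = a.bum_mode.get(vni), b.bum_mode.get(vni)
        if mode_a != mode_b:
            problems.append(f"VNI {vni}: BUM mode {mode_a} vs {mode_b}")
        elif mode_a == "multicast" and a.mcast_group.get(vni) != b.mcast_group.get(vni):
            problems.append(f"VNI {vni}: multicast group differs")
    return problems


if __name__ == "__main__":
    # Made-up VNIs and group; VLANs 10 and 20 appear in the show vpc output above.
    leaf1 = VxlanPeerConfig({10: 10010, 20: 10020}, {10, 20},
                            {10010: "ingress-replication", 10020: "ingress-replication"})
    leaf2 = VxlanPeerConfig({10: 10010, 20: 10020}, {10},
                            {10010: "ingress-replication", 10020: "multicast"},
                            {10020: "239.1.1.1"})
    for problem in vxlan_vpc_mismatches(leaf1, leaf2):
        print(problem)
```

With the example values it reports the SVI present only on one peer for VLAN 20 and the BUM mode mismatch on VNI 10020.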

Now let’s connect a host using vPC.

Setting up Bond and LACP on Linux Host

I’m using an Ubuntu host, on which we will set up the following:

- Create a YAML file for the network configuration to supply to Netplan.

- Bond two interfaces, ens192 and ens224, into a single bond, bond0.

- Enable LACP (802.3ad mode) on the bond.

- Configure the hashing policy to use both L3 and L4 information.

- Add a route for 198.51.100.0/24 via 10.0.0.1.
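The layer3+4 policy decides which member link carries each flow by hashing addresses and ports. The sketch below is a simplified model of the layer3+4 formula described in the Linux kernel bonding documentation: combine the ports, XOR in the source and destination IP addresses, fold the high bits down, then take the result modulo the number of links. It ignores byte order and other kernel implementation details, so it will not necessarily pick the same link the kernel does, and the destination 198.51.100.10 is a hypothetical address in the routed network.

```python
import ipaddress
from collections import Counter


def layer34_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                 n_links: int) -> int:
    """Return a member-link index for an IPv4 TCP/UDP flow.

    Simplified model of the bonding driver's layer3+4 formula; it is not
    guaranteed to match the kernel's choice for a given flow.
    """
    h = (src_port << 16) | dst_port  # both ports as one 32-bit word
    h ^= int(ipaddress.IPv4Address(src_ip)) ^ int(ipaddress.IPv4Address(dst_ip))
    h ^= h >> 16  # fold the high bits into the low bits
    h ^= h >> 8
    return h % n_links


if __name__ == "__main__":
    # One flow always maps to the same link...
    print(layer34_link("10.0.0.33", "198.51.100.10", 50000, 443, 2))
    # ...while many flows (different source ports) spread over both links.
    spread = Counter(layer34_link("10.0.0.33", "198.51.100.10", p, 443, 2)
                     for p in range(49152, 49252))
    print(spread)
```

The design consequence is worth keeping in mind: packets of one flow stay on one link, which avoids reordering, but a single flow never gets more than one link’s bandwidth. The gain from the bond comes from many flows.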

On my host, the YAML file is in /etc/netplan/:

```
server3:~$ cd /etc/netplan/
server3:/etc/netplan$ ls
01-network-manager-all.yaml
```

The file right now is very bare bones:

```
server3:/etc/netplan$ cat 01-network-manager-all.yaml
# Let NetworkManager manage all devices on this system
network:
  version: 2
  renderer: NetworkManager
```

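Before messing with system configuration, it’s always good to take a backup:

```
server3:/etc/netplan$ sudo cp 01-network-manager-all.yaml 01-network-manager-all-backup.yaml
server3:/etc/netplan$ ls
01-network-manager-all-backup.yaml  01-network-manager-all.yaml
```

Keep in mind that Netplan reads every .yaml file in /etc/netplan/, so a backup stored there with a .yaml extension is parsed as well. That is harmless here, since the backup only sets the version and renderer, but a different extension such as .yaml.bak avoids surprises.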

Now we add the following YAML file:

```yaml
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    ens192:
      dhcp4: no
    ens224:
      dhcp4: no
  bonds:
    bond0:
      interfaces: [ens192, ens224]
      addresses: [10.0.0.33/24]
      routes:
        - to: 198.51.100.0/24
          via: 10.0.0.1
      parameters:
        mode: 802.3ad
        transmit-hash-policy: layer3+4
        mii-monitor-interval: 1
      nameservers:
        addresses:
          - "8.8.8.8"
          - "9.9.9.9"
```
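The static route only works if its next hop, 10.0.0.1, is reachable directly on bond0’s subnet. A small pre-flight check for that, sketched with Python’s standard ipaddress module, is shown here; it takes the values by hand rather than parsing the YAML file, which would need a third-party parser such as PyYAML. The second call uses a hypothetical wrong next hop to show the failing case.

```python
import ipaddress


def next_hop_is_on_link(interface_address: str, next_hop: str) -> bool:
    """True if next_hop falls inside the subnet of interface_address (CIDR)."""
    network = ipaddress.ip_interface(interface_address).network
    return ipaddress.ip_address(next_hop) in network


if __name__ == "__main__":
    # Values from the bond0 stanza above: address 10.0.0.33/24, next hop 10.0.0.1.
    print(next_hop_is_on_link("10.0.0.33/24", "10.0.0.1"))  # True
    # Hypothetical bad next hop outside 10.0.0.0/24.
    print(next_hop_is_on_link("10.0.0.33/24", "10.0.1.1"))  # False
```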

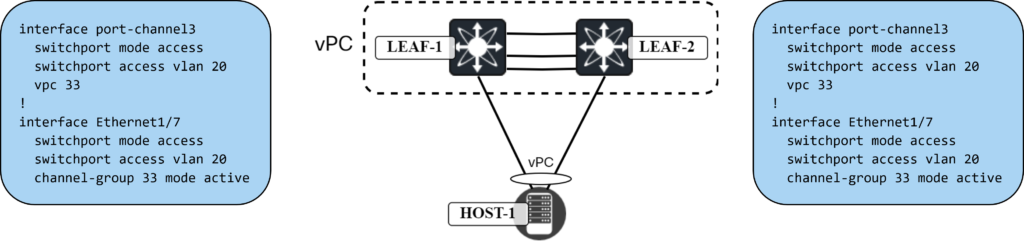

Before applying the configuration, I will add the vPC on LEAF1 and LEAF2. The commands needed are shown below:

Now let’s update the network configuration on the Ubuntu host:

```
server3:/etc/netplan$ sudo netplan apply
```

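The vPC is now up on LEAF1. Only Eth1/7 is listed as a member because the host’s second link terminates on LEAF2, the other vPC peer: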

```
Leaf1# show port-channel summary interface port-channel 33
Flags:  D - Down        P - Up in port-channel (members)
        I - Individual  H - Hot-standby (LACP only)
        s - Suspended   r - Module-removed
        b - BFD Session Wait
        S - Switched    R - Routed
        U - Up (port-channel)
        p - Up in delay-lacp mode (member)
        M - Not in use. Min-links not met
--------------------------------------------------------------------------------
Group Port-       Type     Protocol  Member Ports
      Channel
--------------------------------------------------------------------------------
33    Po33(SU)    Eth      LACP      Eth1/7(P)

Leaf1# show lacp port-channel interface port-channel 33
port-channel33
  Port Channel Mac=0-ad-e6-88-1b-8
  Local System Identifier=0x8000,0-ad-e6-88-1b-8
  Admin key=0x8021
  Operational key=0x8021
  Partner System Identifier=0xffff,82-8b-b6-cf-ba-df
  Operational key=0xf
  Max delay=0
  Aggregate or individual=0
  Member Port List=Eth1/7
```

The bond0 interface can now be seen on the host:

```
server3:/etc/netplan$ ip addr
<SNIP>
5: bond0: <BROADCAST,MULTICAST,MASTER,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 82:8b:b6:cf:ba:df brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.33/24 brd 10.0.0.255 scope global noprefixroute bond0
       valid_lft forever preferred_lft forever
    inet6 fe80::808b:b6ff:fecf:badf/64 scope link
       valid_lft forever preferred_lft forever
```
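The LACP output on LEAF1 ties back to the host: the Partner System Identifier 0xffff,82-8b-b6-cf-ba-df is a priority followed by bond0’s MAC address, 82:8b:b6:cf:ba:df. The priority 0xffff (65535) matches the Linux bonding driver’s documented default actor system priority. NX-OS prints the MAC with dashes and, judging by the output above, drops leading zeros. A minimal sketch that normalizes both formats and compares them; the function names are illustrative only.

```python
def normalize_mac(mac: str) -> str:
    """Return a MAC as aa:bb:cc:dd:ee:ff, accepting '-' or ':' separators."""
    octets = mac.strip().replace("-", ":").split(":")
    if len(octets) != 6:
        raise ValueError(f"not a 6-octet MAC address: {mac!r}")
    # int(..., 16) plus :02x restores dropped leading zeros: "8" -> "08"
    return ":".join(f"{int(octet, 16):02x}" for octet in octets)


def partner_matches_bond(partner_system_id: str, bond_mac: str) -> bool:
    """Compare an LACP 'priority,mac' system identifier with the bond MAC."""
    _priority, _, partner_mac = partner_system_id.partition(",")
    return normalize_mac(partner_mac) == normalize_mac(bond_mac)


if __name__ == "__main__":
    print(normalize_mac("0-ad-e6-88-1b-8"))  # 00:ad:e6:88:1b:08
    print(partner_matches_bond("0xffff,82-8b-b6-cf-ba-df", "82:8b:b6:cf:ba:df"))  # True
```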

We can get some more information using cat /proc/net/bonding/bond0:

```
cat /proc/net/bonding/bond0
Ethernet Channel Bonding Driver: v6.5.0-25-generic

Bonding Mode: IEEE 802.3ad Dynamic link aggregation
Transmit Hash Policy: layer3+4 (1)
MII Status: up
MII Polling Interval (ms): 1
Up Delay (ms): 0
Down Delay (ms): 0
Peer Notification Delay (ms): 0

802.3ad info
LACP active: on
LACP rate: slow
Min links: 0
Aggregator selection policy (ad_select): stable

Slave Interface: ens192
MII Status: up
Speed: 10000 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:50:56:ad:4f:d3
Slave queue ID: 0
Aggregator ID: 1
Actor Churn State: none
Partner Churn State: none
Actor Churned Count: 0
Partner Churned Count: 0

Slave Interface: ens224
MII Status: up
Speed: 10000 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:50:56:ad:40:5d
Slave queue ID: 0
Aggregator ID: 1
Actor Churn State: none
Partner Churn State: none
Actor Churned Count: 0
Partner Churned Count: 0
```
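Aggregator ID is the field to watch here: both ens192 and ens224 report Aggregator ID 1, meaning they form one LACP bundle. An 802.3ad bond only sends traffic over one active aggregator, so if the two leafs did not present themselves as a single LACP partner (which is what vPC arranges), the links would normally land in different aggregators and only one would carry traffic. A minimal sketch that parses this file and checks for that, assuming the “Key: value” layout shown above:

```python
from pathlib import Path


def parse_bonding_status(text: str) -> dict:
    """Split /proc/net/bonding/<bond> text into bond-level and per-slave fields."""
    bond: dict[str, str] = {}
    slaves: dict[str, dict[str, str]] = {}
    current = None  # field dict of the slave section being read, if any
    for raw in text.splitlines():
        key, sep, value = raw.partition(":")
        if not sep:
            continue  # blank lines and headers such as "802.3ad info"
        key, value = key.strip(), value.strip()
        if key == "Slave Interface":
            current = slaves.setdefault(value, {})  # start a new slave section
        elif current is not None:
            current[key] = value
        else:
            bond[key] = value
    return {"bond": bond, "slaves": slaves}


def lag_is_healthy(status: dict, expected_links: int = 2) -> bool:
    """True if the bond runs 802.3ad and every link is up in one aggregator."""
    slaves = list(status["slaves"].values())
    aggregators = {s.get("Aggregator ID") for s in slaves}
    return (
        status["bond"].get("Bonding Mode", "").startswith("IEEE 802.3ad")
        and len(slaves) == expected_links
        and all(s.get("MII Status") == "up" for s in slaves)
        and len(aggregators) == 1
    )


if __name__ == "__main__":
    proc_file = Path("/proc/net/bonding/bond0")
    if proc_file.exists():  # only present on a host with bond0 configured
        print(lag_is_healthy(parse_bonding_status(proc_file.read_text())))
```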

One interesting thing to note is that after enabling the bond, the member interfaces ens192 and ens224 show the same MAC address as the bond0 interface, while their original hardware addresses are still listed as permaddr:

```
server3:/etc/netplan$ ip link
<SNIP>
3: ens192: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc fq_codel master bond0 state UP mode DEFAULT group default qlen 1000
    link/ether 82:8b:b6:cf:ba:df brd ff:ff:ff:ff:ff:ff permaddr 00:50:56:ad:4f:d3
    altname enp11s0
4: ens224: <BROADCAST,MULTICAST,SLAVE,UP,LOWER_UP> mtu 1500 qdisc fq_codel master bond0 state UP mode DEFAULT group default qlen 1000
    link/ether 82:8b:b6:cf:ba:df brd ff:ff:ff:ff:ff:ff permaddr 00:50:56:ad:40:5d
    altname enp19s0
5: bond0: <BROADCAST,MULTICAST,MASTER,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000
    link/ether 82:8b:b6:cf:ba:df brd ff:ff:ff:ff:ff:ff
```

With the vPC configured, we’ll verify connectivity in the next blog post.
