Something is coming. You can feel it if you talk to enough engineering leaders.

A quiet tension. A sense that AI isn’t just a new tool — it’s a structural shift.

A force that will reshape how we build, how we organize, and who we need on our teams.

But the conversation is often… shallow.

People keep asking “Will AI replace engineers?” or “Will it make teams 10x faster?” — as if output alone decides the future.

I think the real story is different.

The Myth of Faster Teams

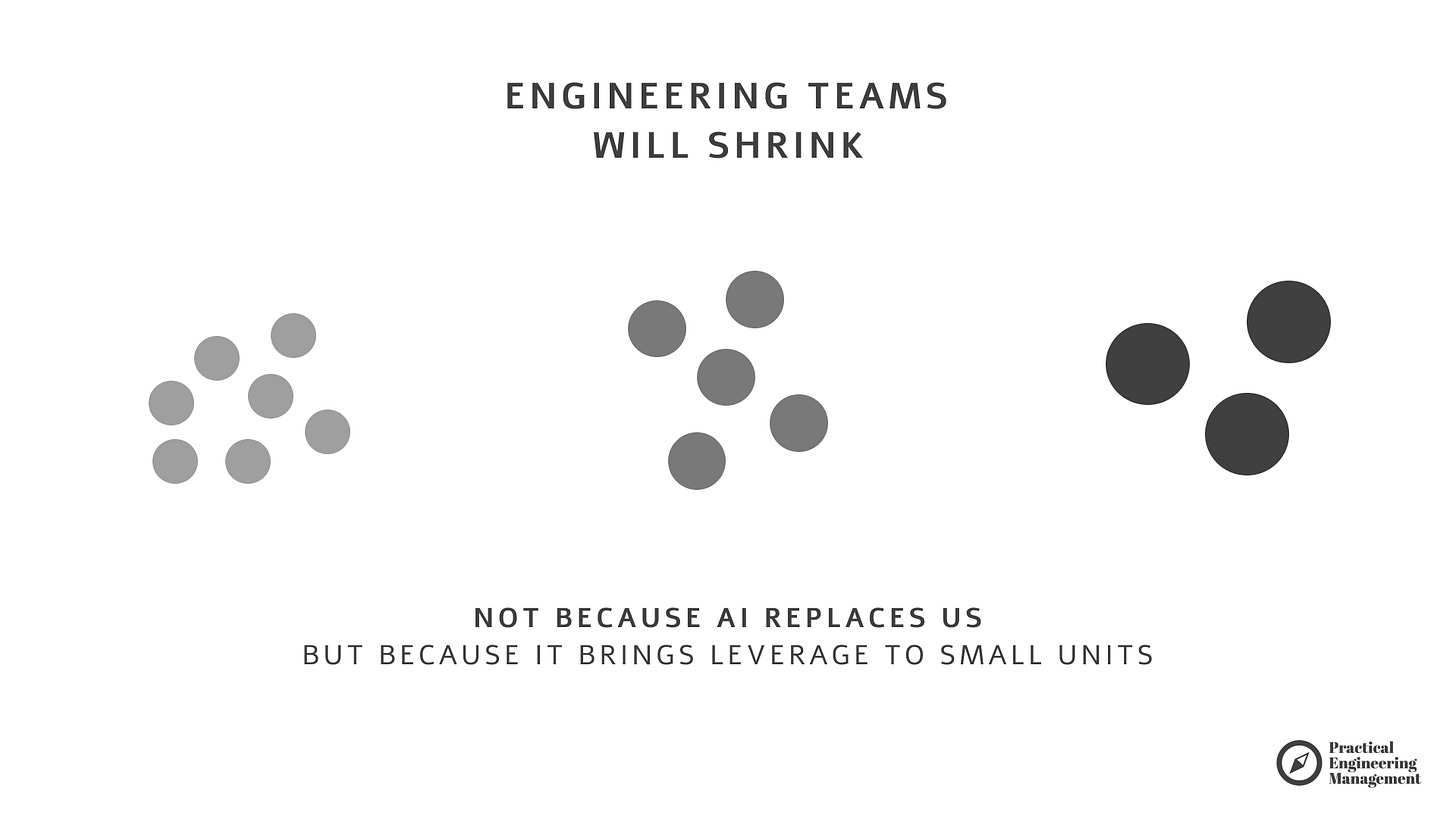

Many leaders I speak with believe AI will make large teams go faster.

If we have 100 engineers today, we’ll deliver twice as much tomorrow.

But I’m on the opposite side of this debate.

Because speed itself is no longer the bottleneck.

Validation, discovery, and maintenance are.

We can already generate code at near-light speed.

That part is solved.

But just as anything nearing the speed of light experiences relativistic effects — time dilation, mass increase, and rising energy requirements — teams approaching “AI-assisted velocity” feel mounting forces of their own: more validation, more maintenance, more coordination load.

Faster creation → disproportionately faster accumulation of responsibility.

And that pressure doesn’t scale linearly with the size of the team.

The Hidden Geometry of Overhead

Every engineer added to a team introduces new communication pathways.

The formula is simple:

Connections = N × (N – 1) / 2, where N is the number of people on the team.
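Here is a minimal Python sketch that evaluates the formula for a few team sizes, to make the growth concrete. The function name `communication_paths` is purely illustrative. It counts only distinct pairs of people, not the meetings or docs each pair ends up generating.

```python
def communication_paths(n: int) -> int:
    """Count the distinct pairwise communication lines in a team of n people."""
    if n < 0:
        raise ValueError("team size cannot be negative")
    # Each of the n people can talk to n - 1 others; divide by 2 so A-B and B-A count once.
    return n * (n - 1) // 2


if __name__ == "__main__":
    for size in (5, 10, 20, 50, 100):
        print(f"{size:>3} engineers -> {communication_paths(size):>4} connections")
```

Going from 10 to 100 engineers multiplies headcount by 10 but multiplies connections by 110 (45 → 4,950). That is the non-linear pressure in plain numbers.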

This is why large teams swim in meetings, alignment docs, JIRA gymnastics, and endless “syncs.”

We built processes to compensate for scale — not because they are good, but because they are necessary when 100 people must row in the same direction.

But if AI gives us the ability to do more with fewer people, then shrinking teams isn’t just possible — it becomes strategically beneficial.

Simpler orgs.

Fewer communication lines.

Higher clarity.

Better focus.

For the first time in decades…

we can optimize for small.

Why It Hasn’t Happened Yet

A fair question people ask:

“If AI makes teams smaller, why didn’t we see it in 2024–2025?”

Because we’re still terrible at using AI well.

Many teams tried.

Most produced chaos:

More output, worse quality

Faster changes, higher defect rates

Systems built fast… maintained slowly

During a 1:1 with one of my team’s principal engineers, we discussed this challenge: juniors have already started using AI to generate code. The code works and meets the functional requirements, but it doesn’t scale and is full of gaps (security, architecture, and so on). Ultimately, this will push us toward writing better specs, but for now… the engineer I talked to spends hours on code review.

Research points the same way: AI boosts output, but it also amplifies the mess when teams don’t control the process.

We failed not because AI is bad.

We failed because we haven’t yet mastered how to drive it.

But some individuals have figured it out.

They can:

One engineer, guided by AI, now delivers what previously required a small team.

It’s not widespread yet.

But it’s real.

Which means 2026 won’t be about “AI generating more.”

It will be about companies discovering they no longer need as many people to build the same amount of product.

The Real Future: Smaller Teams, Larger Impact

Marty Cagan, one of the most influential product leaders, says:

The current generative AI-based tools are helping to improve the productivity of engineers by something in the range of 20-30%. (…) But in practice, it means that where you might have had 8 engineers on a product team, now you might have 5 or 6.
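Cagan’s example can also be run through the connections formula from earlier. The Python sketch below compares the drop in headcount with the drop in communication lines when an 8-person team shrinks to 6 or 5. The helper names are illustrative. The sketch also rests on a simplifying assumption: coordination cost tracks the number of pairwise lines.

```python
def communication_paths(n: int) -> int:
    # Pairwise communication lines in a team of n people: n * (n - 1) / 2
    return n * (n - 1) // 2


def percent_drop(before: int, after: int) -> float:
    # Percentage decrease going from `before` to `after`
    return 100 * (before - after) / before


baseline = 8  # team size from the Cagan quote
for smaller in (6, 5):
    people_cut = percent_drop(baseline, smaller)
    lines_cut = percent_drop(communication_paths(baseline), communication_paths(smaller))
    print(f"{baseline} -> {smaller} engineers: "
          f"{people_cut:.1f}% fewer people, {lines_cut:.1f}% fewer communication lines")
```

Under that assumption, a team that is 25% to 37.5% smaller carries roughly 46% to 64% fewer communication lines (28 → 15 or 10). That gap is the quadratic effect behind optimizing for small.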

As AI matures and workflows stabilize, here’s what’s coming:

A backend engineer can generate solid UI.

A frontend engineer can scaffold backend services.

A full-stack engineer can prototype an entire product — alone.

A single team can cover what once required a full department.

Not because humans became superhuman.

But because the surface area of what one person can command expands when AI does most of the mechanical lifting.

This shifts the bottleneck.

The challenge won’t be building features.

It will be:

This is where engineering leaders must re-anchor.

So What Should Engineers and Leaders Do Now?

If teams shrink — and they will — how do you stay indispensable?

Here’s the simple truth:

The people who thrive will be the ones who stop thinking of themselves as “coders”… and start thinking of themselves as “problem-solvers for the business.”

Let’s break it down.

1. Become Product-Oriented, Not Ticket-Oriented

Ask:

If you can’t answer these, AI can’t help you — because you’re optimizing for output, not outcomes.

Engineers who understand the product context rise.

Those who don’t will be replaced by cheaper automation.

For a simple exercise in solving the right problems, have a look at the Problem Solving Framework.

2. Own the Full Lifecycle

Not just building.

But:

AI amplifies creation.

Humans must amplify selection and judgment.

3. Learn to Drive AI With Clarity

The winners will be those who know how to:

This is a skill.

It’s not magic.

And it will separate professionals from button-clickers.

If you want to get better at driving AI, have a look at my book: From Engineers to Operators – AI Strategy Workbook for Engineering Leaders.


4. Strengthen Cross-Functional Collaboration

Future teams look like micro product pods:

AI handles execution.

Humans handle intention and direction.

If you can speak fluently with PMs and designers, you’re future-proof.

If you can’t… now is the time to learn. The Product Engineering Manifesto can help you with that.

Final Thoughts: The Profession Is Changing — But Not Dying

2026 won’t kill engineering.

It will kill the old model of engineering:

What will survive — and thrive — are leaders and engineers who:

understand the business

love solving real problems

shape products, not just code

use AI as leverage, not as a crutch

build small, focused, outcome-driven teams

The future belongs to designers of systems, not just writers of code.

And if you lean into that shift now,

you won’t just survive the shrinking of teams —

you’ll be the one people want to keep when the shrinking begins.